Chatbots gone rogue: How weak chatbot security enables bad actors

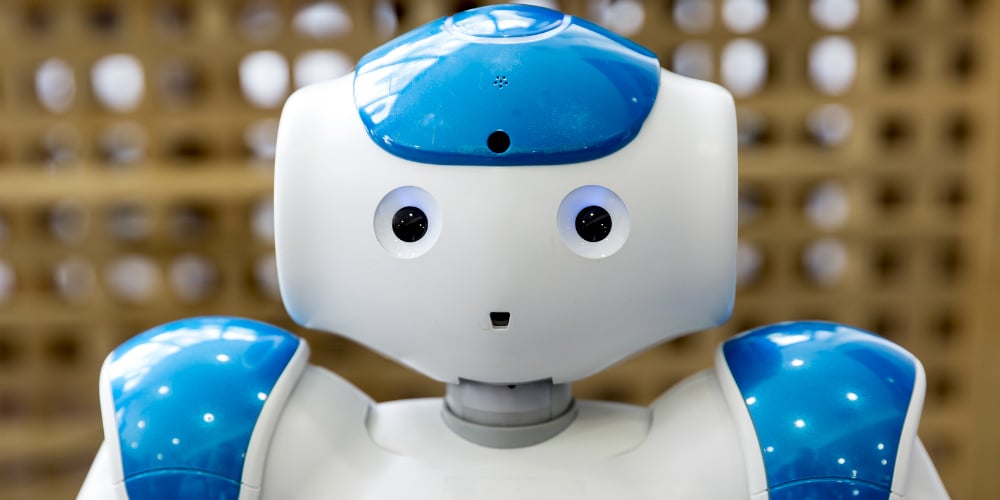

Over a short time span, chatbots have become standard practice in customer service. Services from basic troubleshooting advice to full-fledged payment services are available to consumers with minimal intervention from human staff. As with any automated process, great care should be taken to make chatbots robust and secure. As chatbots have become more sophisticated, the potential for malfunction or even exploitation has increased. This raises the stakes for chatbot providers and users. It also raises the question: what could go wrong if I use an unsafe chatbot?

In 2017, German regulators raised a red flag about a line of children’s toys. The government found that these dolls could be used as clandestine surveillance devices, which are illegal under German law. The dolls were marketed as remarkable new technology when, in actuality, they were just a chatbot. Children could ask the doll questions and get answers based on the doll’s fictional life. Concerns arose when parents realized that their children were having lengthy, if somewhat one-sided, conversations with the doll. The children believed they were having a private chat, but all of that data was being sent to the toy company’s chatbot operator. In addition, several consumer awareness groups and tech organizations demonstrated that the Bluetooth receiver in the doll was not secure. It could be paired with any phone from up to fifty feet away by anyone who knew how. That phone would then be able to access the microphone and speakers embedded in the doll, listening and speaking through it. It’s no surprise that after the German ban went into effect, owners were told to destroy the doll or face either a heavy fine or a two-year jail sentence.

The doll’s chatbot was vulnerable to what’s called a “man-in-the-middle” attack, in which a third party can access a chatbot conversation. They can then passively monitor the chatlog or even alter the messages sent, perhaps to carry out a phishing attack or trick the user into divulging sensitive information. This is far from the only way a malicious party can take advantage of a chatbot, however.
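To make the message-alteration half of this attack concrete, here is a minimal Python sketch of one common defense: attaching a keyed hash (HMAC) to each message so the recipient can tell whether it was changed in transit. This is an illustration only, not how the doll or any particular chatbot worked. The key, the function names `sign_message` and `verify_message`, and the sample messages are all hypothetical. Note that this only detects tampering; it does nothing to stop passive eavesdropping, which requires encrypting the channel as well.

```python
import hashlib
import hmac

# Hypothetical shared secret known only to the chatbot server and the client.
# A real deployment would provision and store this securely.
SECRET_KEY = b"demo-key-not-for-production"


def sign_message(message: str, key: bytes = SECRET_KEY) -> str:
    """Return a hex HMAC-SHA256 tag for the message."""
    return hmac.new(key, message.encode("utf-8"), hashlib.sha256).hexdigest()


def verify_message(message: str, tag: str, key: bytes = SECRET_KEY) -> bool:
    """Check the tag in constant time; False means the message was altered."""
    expected = sign_message(message, key)
    return hmac.compare_digest(expected, tag)


# The chatbot sends a message together with its tag.
original = "Your order has shipped."
tag = sign_message(original)

# A man-in-the-middle swaps the text for a phishing prompt but cannot
# produce a matching tag without the secret key.
tampered = "Your order failed. Re-enter your card number at this link."

print(verify_message(original, tag))   # untouched message
print(verify_message(tampered, tag))   # altered message
```

The untouched message verifies and the altered one does not, because the attacker cannot compute a valid tag without the shared key.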
